Long ago, people believed that the eye emitted invisible rays that struck the world outside, causing it to become visible to the beholder. That’s not the case, of course, but that doesn’t mean it wouldn’t be a perfectly good way to see. In fact, it’s the basic idea behind lidar, a form of digital imaging that’s proven very useful in everything from archaeology to autonomous cars.

Lidar is a sort-of acronym that may or may not be capitalized when you see it, and it usually stands for “light detection and ranging,” though sometimes people like to fit “imaging” between the first two words. That it sounds similar to sonar and radar is no coincidence; they all operate on the same principle: echolocation. Hey, if bats can do it, why can’t we?

Seeing with echoes

The basic idea is simple: you shoot something out into the world, then track how long it takes to come back. Bats use sound waves — a little click or squeak that bounces off the environment and returns to their ears sooner or later depending on how far away a tree or bug is. Little imagination is needed to turn that into sonar, which just sends a bigger click into the surrounding water and listens for echoes. If you know exactly how fast sound travels in water, and you know exactly how long it took to hit something and come back, then you know exactly how far away whatever it hit is.

The entire article probably could have just been this gif.

From there, it’s not much of a jump to radar, which does much the same thing with radio waves. The radar dishes we’ve all seen at airports and on big boats spin around, firing out a beam of radio waves. When those waves hit something solid (especially something metallic, like a plane), they bounce back and the dish detects them. Radio waves travel at the speed of light, and since we know (among other things) exactly how fast that is in our atmosphere, we can calculate how distant the plane is from how long the echo took to return.
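The arithmetic behind both sonar and radar is the same: multiply the wave's speed by the round-trip time, then halve it, since the signal travels out and back. Here is a minimal Python sketch of that calculation. The function name `echo_distance` and the example timings are illustrative assumptions, and the speed of sound in water is a rough round figure (it varies with temperature, salinity and depth).

```python
# Round-trip ranging: distance = speed * elapsed_time / 2.
# Both constants are approximations chosen for illustration.
SPEED_OF_SOUND_WATER_M_S = 1500.0    # rough figure for seawater
SPEED_OF_LIGHT_M_S = 299_792_458.0   # vacuum value; air is only slightly slower


def echo_distance(round_trip_seconds: float, speed_m_s: float) -> float:
    """Return the one-way distance in meters to whatever produced the echo."""
    if round_trip_seconds < 0:
        raise ValueError("round-trip time cannot be negative")
    # The signal covers the distance twice (out and back), so halve the total.
    return speed_m_s * round_trip_seconds / 2.0


# A sonar ping that comes back after 0.2 seconds (hypothetical timing).
sonar_range_m = echo_distance(0.2, SPEED_OF_SOUND_WATER_M_S)

# A radar echo that comes back after 100 microseconds (hypothetical timing).
radar_range_m = echo_distance(100e-6, SPEED_OF_LIGHT_M_S)

print(f"sonar target: {sonar_range_m:.0f} m away")
print(f"radar target: {radar_range_m / 1000:.1f} km away")
```

Note how different the timescales are: the sonar echo takes a fifth of a second to cover a few hundred meters, while the radar echo covers tens of kilometers in a ten-thousandth of a second.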

Now, radio waves are great for finding solid things over long distances, but they have some shortcomings. For instance, they pass right through some materials, and because of the physics involved, radar can be tricky to use at distances below a hundred feet or so. Meanwhile, sound waves have the opposite problem: in air they dissipate quickly and travel relatively slowly, making them unsuited to finding anything more than a dozen feet away.

Lidar is a happy medium. Lidar systems use lasers to send out pulses of light just outside the visible spectrum and time how long it takes each pulse to return. When it does, the direction of and distance to whatever that specific pulse hit are recorded as a point in a big 3D map with the lidar unit at the center. Different lidar units have different methods, but generally they sweep in a circle like a radar dish, while simultaneously moving the laser up and down.
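To make the "point in a big 3D map" idea concrete, here is a rough Python sketch that turns one returning pulse into x, y, z coordinates with the lidar unit at the origin. The function name `pulse_to_point`, the angle conventions (azimuth measured around the vertical axis, elevation measured up from horizontal) and the use of the vacuum speed of light are all assumptions for illustration; real units have their own conventions and calibration steps.

```python
import math

SPEED_OF_LIGHT_M_S = 299_792_458.0  # vacuum value, used here as an approximation


def pulse_to_point(azimuth_deg: float, elevation_deg: float,
                   round_trip_seconds: float) -> tuple[float, float, float]:
    """Convert one pulse (direction plus round-trip time) to an (x, y, z) point.

    Assumed conventions: the lidar unit sits at (0, 0, 0), azimuth is the
    angle of the horizontal sweep, elevation is the up/down tilt of the laser.
    """
    # Time of flight gives the one-way distance to whatever the pulse hit.
    distance = SPEED_OF_LIGHT_M_S * round_trip_seconds / 2.0

    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)

    # Split the distance into a horizontal part and a vertical part,
    # then split the horizontal part along the x and y axes.
    horizontal = distance * math.cos(el)
    x = horizontal * math.cos(az)
    y = horizontal * math.sin(az)
    z = distance * math.sin(el)
    return (x, y, z)


# One hypothetical pulse: 45 degrees around, tilted 10 degrees up,
# returning after 200 nanoseconds (an object roughly 30 m away).
point = pulse_to_point(45.0, 10.0, 200e-9)
print(point)
```

Repeat this for every pulse as the laser sweeps around and up and down, and the resulting list of points is the 3D map.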

When lidar was first proposed in the 1960s, lasers and detection mechanisms were bulky and slow to operate. Today, thanks to shrinking electronics and the great speed of our computers, a lidar unit may send out and receive hundreds of thousands or even millions of pulses per second, sweeping its full field of view many times each second.

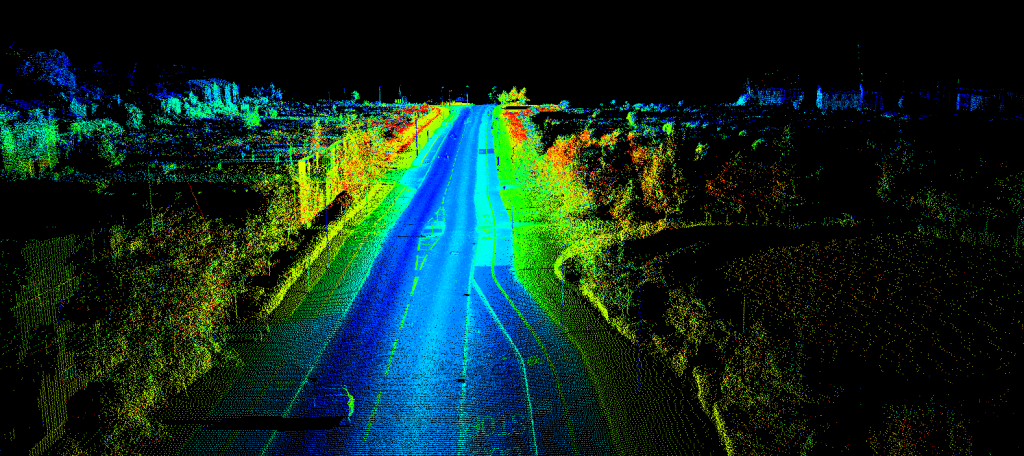

The resulting “point cloud,” as the collection of coordinates from returning laser pulses is called, can be remarkably detailed (and beautiful), showing all the contours of the environment and objects within it.

Lidar units on planes and helicopters can survey the terrain below quickly and accurately during flight; archaeologists can record every detail of a site down to the inch by sending a lidar unit through it; and most recently, a computer vision system in an autonomous car or robot can instantly acquaint itself with its surroundings.

Eye on the road

The happy medium that lidar occupies is especially suited to that last use case: navigating the everyday world. We humans have sophisticated methods of extracting depth data by comparing the images from our two slightly offset visual fields, but if we could shoot lasers out of our eyes instead and count the nanoseconds until they return, that might be even better.

This video is rather old, but it gives a good idea of what the car would see around it with a lidar system:

Lidar doesn’t do everything, though: it can’t, for example, read the letters on a sign since they’re flat. And the systems can be disrupted relatively easily if there’s limited visibility: heavy snow, fog, or something obstructing the unit’s view. So lidar has to work in concert with other systems: its cousins radar and sonar (ultrasound, actually) and ordinary visible-light cameras as well. Lidar might not be able to read a “bump” sign on its own, but a camera might not realize it’s there until too late; by working as a team, they arguably have a better awareness of the immediate environment than a human being.

This world of high-speed, high-precision computer perception is a complex and quickly evolving one, so lidar’s role may change as other technologies supersede it or expand its capabilities. But it seems likely that the ancient notion of vision, wrong as it was in 500 BC, is now here to stay.

Featured Image: Bryce Durbin
